For the last year I’ve been learning through failure about SFP+ 10GB networking. SFP+ requires matching the correct module with the correct cable and the correct settings on the networking device. To further complicate this hot mess, the cables are not bi-directional, so you have to pay special attention to which end you plug in where for the magic of 10GB networking to work.

For my 10GB network I’m running the Ubiquiti Unifi Switch 16 XG (16XG). Over the last year I’ve purchased a number of 10GB SFP+ cables and connectors to use with the switch including:

1. Ubiquiti UDC-3 10G SFP+DAC 3M (more info)

2. Ubiquiti UC-DAC-SFP+ (more info)

3. 10Gtek 10GBASE-CU SFP+ Cable .5M (model CAB-10GSFP-P0.5M) (more info)

4. SFP-10G-CU3M 10G SFP+ Passive Copper Cable 3M (more info)

5. Ubiquiti Multi-Mode SFP+ Fiber Module, 10G, model UF-MM-10G (more info) combined with FS.COM’s 50/125 OM3 Multimode Fiber Patch Cable – 2 M – product ID 41729 (more info)

If you are shopping for new cables, Item 5 is my recommendation. Keep reading for the reason why.

For the rest of this document, I’ll be referring to each cable or cable combo as the number listed above, for example the Ubiquiti UDC-3 10G SFP+DAC 3M will be referred to as Item 1.

I did not run into any glaring problems with this mix of adapters when connected to the 16XG until I added a VMware vSAN cluster to the network. I use a 3-node VMware ESXi 7.01 vSAN cluster running on the VMware-unsupported Intel NUC platform. Each Intel NUC uses a Thunderbolt 3 10GB SFP+ adapter (QNAP QNA-T310G1S) to connect to the switch. While the three Intel NUCs are identical in hardware and software, one of them was connected with Item 4 above and the other two with Item 5. This was mainly due to budget constraints: I had run out of Item 5 and had an Item 4 in a drawer ready to go. 10GB is 10GB, right? Boy, was I wrong.

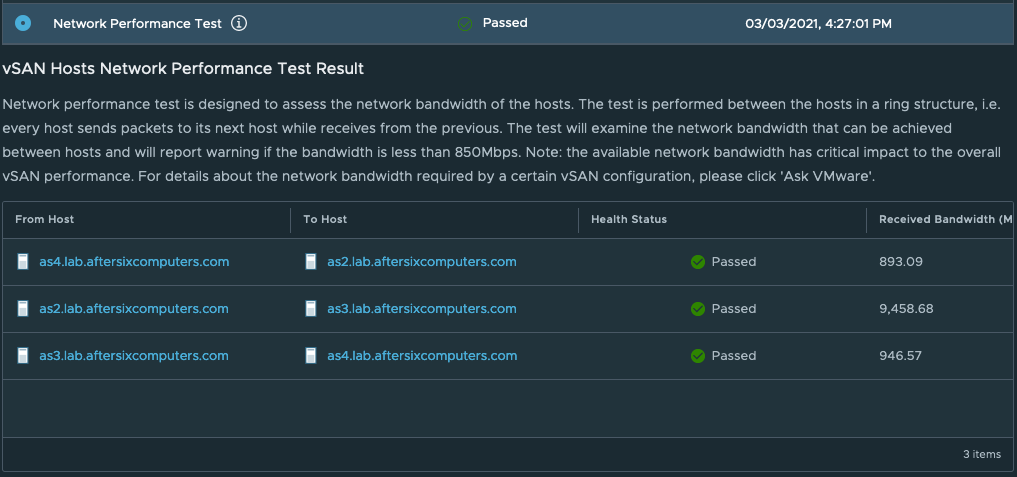

The symptoms I was experiencing were terrible file transfer speeds, very slow responses from the VMs running on the cluster, and vMotion events taking a long time. I spent a few weeks troubleshooting the vSAN and ESXi configuration, thinking the speeds were likely due to the hard drives or some misconfiguration on my part. When I exhausted that route, I started thinking about hardware differences, and the network cable was the only one. I started simple and ran the included vSAN Host Network Performance Test. It’s the last column in the screenshot that had me concerned:

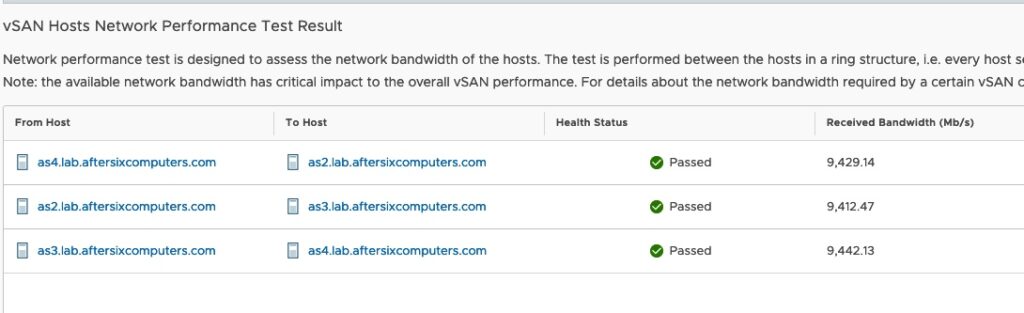

I broke out iPerf and began running a number of other tests, and I kept coming up with the same problem: something was wrong with the connection from AS2, the single device running the Item 4 network configuration. So I broke out the wallet and purchased another set of Item 5, waited a week for the parts to arrive, and swapped out the cable. I re-ran the test and my jaw dropped.

I re-ran the iPerf tests and was pleased to see all 3 nodes in the vSAN cluster now exhibit the near 10GB speeds I paid for in the first place.
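A per-node comparison like this can be scripted. The following is a minimal sketch rather than the exact commands used above: it assumes iperf3 (the post only says "iPerf") run on each node with `--json` and the output saved to one file per host. The 80% threshold and the helper names `received_gbps` and `slow_links` are illustrative assumptions.

```python
from __future__ import annotations

import json
import sys

LINK_SPEED_GBPS = 10.0  # nominal SFP+ line rate
MIN_FRACTION = 0.8      # assumption: flag anything below 80% of line rate


def received_gbps(report: dict) -> float:
    """Receiver-side TCP throughput in Gbit/s from an `iperf3 --json` report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def slow_links(reports: dict[str, dict], min_fraction: float = MIN_FRACTION) -> list[str]:
    """Return the hosts whose measured throughput falls below the threshold."""
    floor = LINK_SPEED_GBPS * min_fraction
    return sorted(host for host, rep in reports.items() if received_gbps(rep) < floor)


if __name__ == "__main__":
    # Each argument is HOST=path/to/report.json (hypothetical file names).
    reports = {}
    for arg in sys.argv[1:]:
        host, path = arg.split("=", 1)
        with open(path) as fh:
            reports[host] = json.load(fh)
    for host in slow_links(reports):
        print(f"{host}: {received_gbps(reports[host]):.2f} Gbit/s, check cable or module")
```

Run it as, for example, `python check_links.py AS2=as2.json` once per test round. A host that keeps showing up in the output while identical hardware does not is a strong hint that the cable or module is the difference.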

Over the next few weeks, mostly due to Covid-related shipping delays, I removed all but Item 2 and Item 5 from the network, and the network has never run faster.

P.S. When replacing the cables, I kept receiving RX errors in the Unifi Network Controller. It turns out I had the fiber cables plugged in the wrong direction. Make sure you have your ends properly matched to avoid this.